Solar LLM Rises in the East via Amazon SageMaker JumpStart

2024/04/04 | Written By: Eujeong Choi (Technical Writer)

Today, we are excited to introduce "Solar", the foundation model made by Upstage. Solar is a large language model (LLM) pre-trained entirely with Amazon SageMaker that outperforms and outspeeds market leaders. Solar leverages its compact size and strong track record to specialize in purpose-training, making it versatile across languages, domains, and tasks.

In this post, we demonstrate the strengths of Solar, our text generation model, and walk through the journey of training it and publishing it on the SageMaker platform.

You can now use Solar within Amazon SageMaker JumpStart to build powerful, purpose-trained LLMs yourself.

About Upstage and its LLM Solar

Upstage is an AI startup that boosts work efficiency with industry-leading document processing engines and large language models (LLMs). Aiming for AGI for work, Upstage plans to automate simple tasks with "document AI" and enhance work productivity with its LLM, "Solar".

In December 2023, Solar made waves by reaching the top of the Hugging Face Open LLM Leaderboard. Using notably fewer parameters, Solar delivers responses comparable to GPT-3.5 but is 2.5 times faster. Let me guide you through how Solar downsized the LLM without sacrificing performance.

Upstage LLM 'Solar' is the top performer among open-source models under 13B parameters on Hugging Face

Along with topping the Hugging Face Open LLM Leaderboard, Solar outperforms GPT-4 with purpose-trained models on certain domains and tasks.

Solar LLM outperforms GPT-4 with purpose-trained models

1. Building Solar with Amazon SageMaker

We carried out the entire building process with Amazon SageMaker. This fully managed machine learning service enabled us to rapidly build, train, and deploy ML models into a production-ready hosted environment. Compared with the infrastructure Upstage used previously, Amazon SageMaker was approximately twice as fast during training and 3.5 to 5 times faster during deployment.

2. Fundamental Architecture

The foundational architecture of Solar is based on a 32-layer Llama 2 structure, and is initialized with pre-trained weights from Mistral 7B, one of the best-performing models compatible with the Llama 2 architecture.

3. Depth Up-scaling (DUS)

How did Solar stay compact, yet become remarkably powerful? Our scaling method ‘depth up-scaling’ (DUS) consists of depthwise scaling and continued pretraining. DUS allows for a much more straightforward and efficient enlargement of smaller models than other scaling methods such as mixture-of-experts.

Unlike mixture-of-experts (MoE), DUS doesn't need complex changes. It requires no additional modules or dynamism, is immediately compatible with easy-to-use LLM frameworks such as Hugging Face, and is applicable to all transformer architectures. (Read paper → )
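The depthwise-scaling half of DUS can be sketched in a few lines. This is a minimal illustration, not Upstage's training code: layers are represented by plain strings, and the choice of removing 8 layers from each copy (turning 32 layers into 48) is taken from the published SOLAR 10.7B paper rather than from this post. The function name `depth_up_scale` is hypothetical.

```python
from copy import deepcopy


def depth_up_scale(layers, m):
    """Build a deeper stack from two copies of `layers`.

    The first copy drops its last `m` layers, the second copy drops its
    first `m` layers, and the two are concatenated. A stack of n layers
    becomes a stack of 2 * (n - m) layers.
    """
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < len(layers)")
    first_part = layers[: n - m]          # layers 0 .. n-m-1 of the base model
    second_part = deepcopy(layers[m:])    # independent copy of layers m .. n-1
    return first_part + second_part


# Stand-in for a 32-layer base model (assumed values: n=32, m=8).
base = [f"layer_{i}" for i in range(32)]
scaled = depth_up_scale(base, m=8)

print(len(scaled))             # number of layers after scaling
print(scaled[23], scaled[24])  # the seam between the two copies
```

After this step the scaled model is still initialized purely from the base weights; the continued pretraining stage described above is what recovers and then improves performance.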

Use Solar with Components

1. Why do we need components?

What concerns you most about implementing LLMs in your business? For many, the primary worry is the unreliability of LLMs due to their propensity for hallucinations. How can we rely on LLMs to provide an adequate output when their responses often seem arbitrary?

Upstage supports RAG to ground your answers in data and Groundedness Check to double-check them. But for this hallucination-resistant system to work, your data needs to be LLM-ready first. With our Layout Analysis, we transform your unstructured data into a form that LLMs understand well. This is fed into our LLM Solar along with RAG and Groundedness Check, making your data really work for you.

2. About Layout Analysis

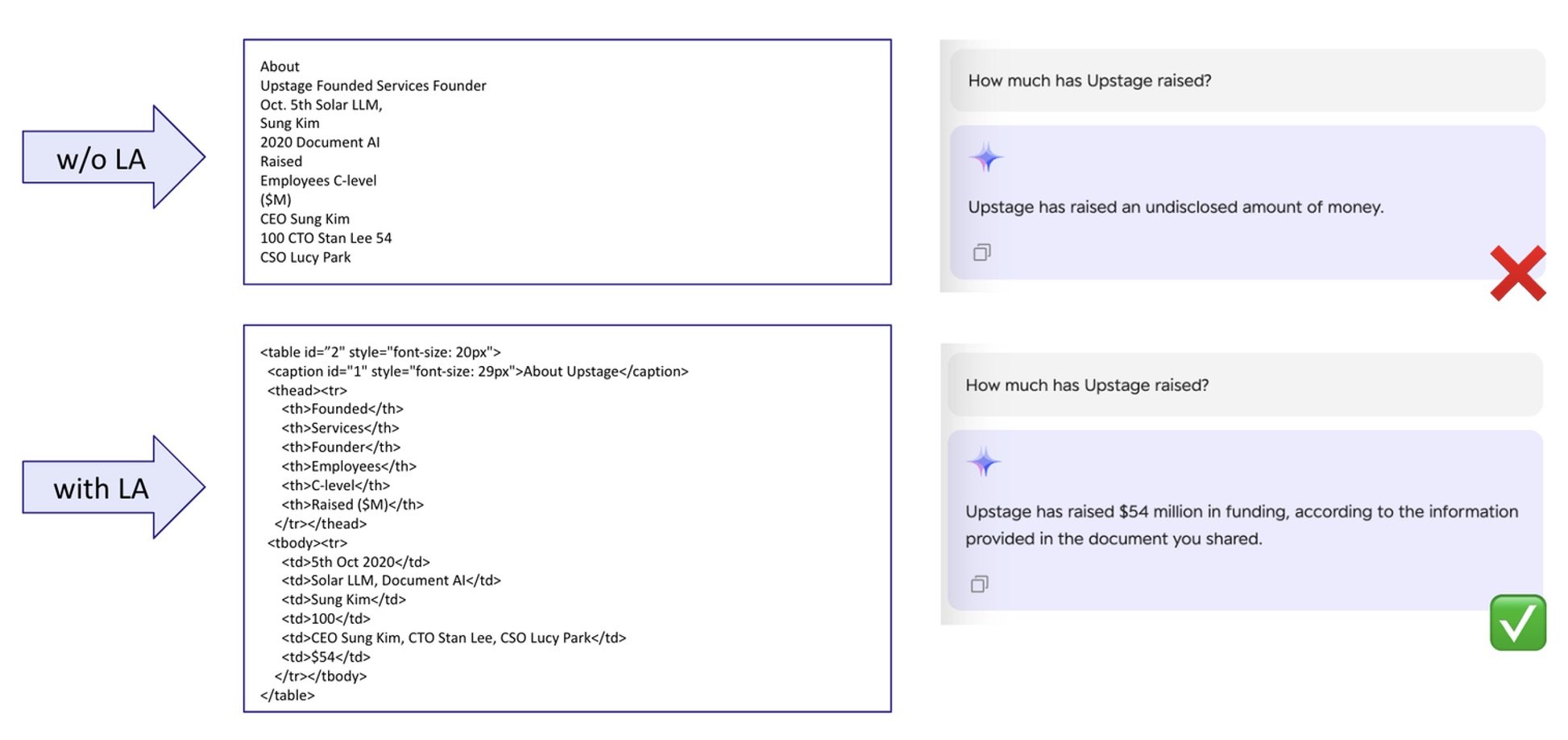

A trustworthy dataset that can serve as a solid reference text is crucial for ensuring that answers are accurately grounded in your data.

Here is an example of a hypothetical random document about Upstage.

How can you transform your unstructured, PDF-formatted document into the ground truth on which the LLM bases its answers? Would OCR (Optical Character Recognition) provide sufficient information for the language model?

Recognizing the layout of your document is essential for the LLM to accurately interpret your data. Simply conducting OCR misses the implicit information communicated through the document's layout, like tables and formatting. Therefore, a holistic approach that encompasses layout analysis is crucial to thoroughly prepare your data for LLM integration.

The deep learning mantra "Garbage in, garbage out" emphasizes the importance of the quality of data provided to language models. Our Layout Analysis is an invaluable tool for making your documents LLM-ready.

3. About RAG

Once Layout Analysis has made your data LLM-ready, we pass it through an embedding model. By converting the text into vectors, we build a vector database from which the retriever can source information pertinent to the user's query.
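The embed, index, and retrieve flow described above can be illustrated with a toy example. This is a sketch under loud assumptions: the `embed` function below is a simple bag-of-words counter standing in for a real embedding model, the "vector database" is an in-memory list, and all function names are hypothetical rather than part of any Upstage API.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)  # missing tokens count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks):
    """'Vector database': each chunk stored next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, query, k=1):
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


chunks = [
    "Solar is a large language model built by Upstage.",
    "Layout Analysis converts documents into LLM-ready text.",
    "Groundedness Check validates answers against a reference.",
]
index = build_index(chunks)
top = retrieve(index, "Who built the Solar language model?", k=1)
print(top)
```

In a real pipeline the retrieved chunks would be placed into the LLM prompt as context, which is what grounds the generated answer in your own data.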

4. About Groundedness Check

As the final step, Groundedness Check significantly bolsters the system's reliability. It validates that the output from the LLM aligns with the content of the reference document before it is delivered to the user, helping to ensure that the information provided is accurate. This critical step lowers the chance of hallucination to nearly zero.

Unlock the full potential of 'Solar' at SageMaker JumpStart

To get started with Solar models, you can access Solar Mini through two key AWS services: "Amazon SageMaker JumpStart", a machine learning hub offering Solar Mini as a pre-built foundation model for easy integration into applications, and "AWS Marketplace", a curated software catalog that simplifies deployment of Solar Mini.
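Once a Solar Mini endpoint has been deployed from JumpStart, calling it might look like the sketch below. `invoke_endpoint` is the standard SageMaker runtime call in boto3, but the request schema is an assumption: the OpenAI-style `messages` payload, the `max_tokens` field, and the helper names are illustrative only, so check the model's listing for the exact format it expects.

```python
import json


def build_chat_payload(prompt, max_tokens=256):
    """Serialize a single-turn chat request.

    ASSUMPTION: an OpenAI-style chat schema; the real Solar Mini
    endpoint may expect different field names.
    """
    return json.dumps(
        {
            "messages": [{"role": "user", "content": prompt}],
            "max_tokens": max_tokens,
        }
    )


def invoke_solar(runtime_client, endpoint_name, prompt):
    """Send a prompt to a deployed endpoint and return the parsed JSON reply.

    `runtime_client` is expected to behave like
    boto3.client("sagemaker-runtime").
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=build_chat_payload(prompt),
    )
    # The response body is a stream; read it fully before decoding.
    return json.loads(response["Body"].read())
```

With AWS credentials configured, you would pass `boto3.client("sagemaker-runtime")` as `runtime_client` and the endpoint name you chose at deployment as `endpoint_name`.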

With Solar LLM, you can easily build a customizable generative AI service without the need to create your own models. Check out our introduction video below on using Solar with Amazon SageMaker JumpStart!