Your Ontology Changes Every Quarter. Your Automation Shouldn't.

Last quarter, your insurance forms had 47 fields. This quarter? 63. Next quarter? New products, new regulations, new data points. Ontology changes faster than any IT sprint.

Here's the problem : Most document automation treats data structures as permanent. Build it once, use it forever. But in the real world:

- New insurance riders add fields overnight

- Regulations split one field into three

- Mergers introduce different terminology

- Product launches require new data points

Traditional automation breaks every time. IT rebuilds the system. Operations waits. Documents pile up. Manual processing fills the gap.

Can your automation keep up?

The Upstage Difference: Built for Change

We designed an extraction system that adapts to your data structure instantly, whether it's today's, tomorrow's, or the one after your next merger.

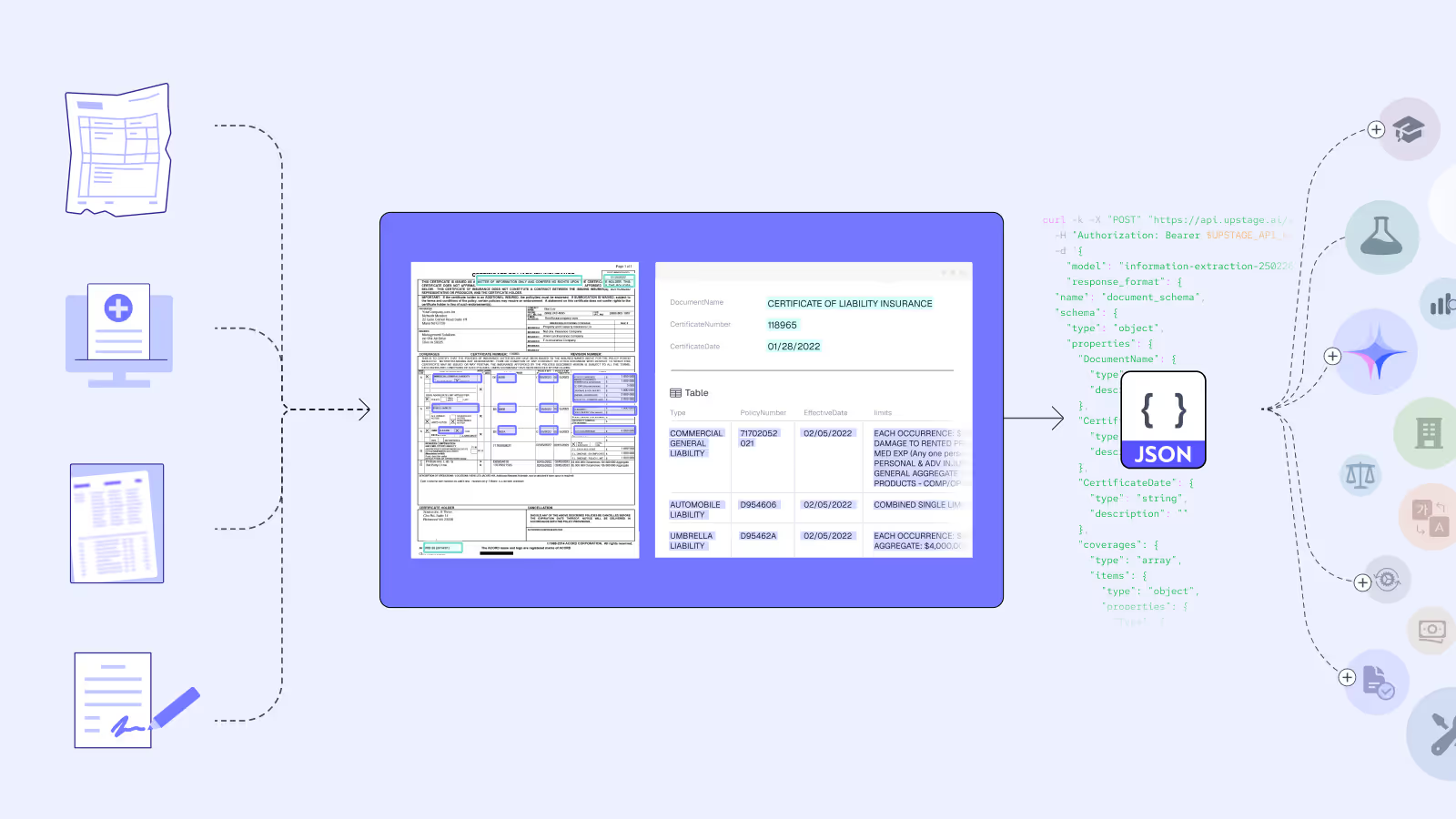

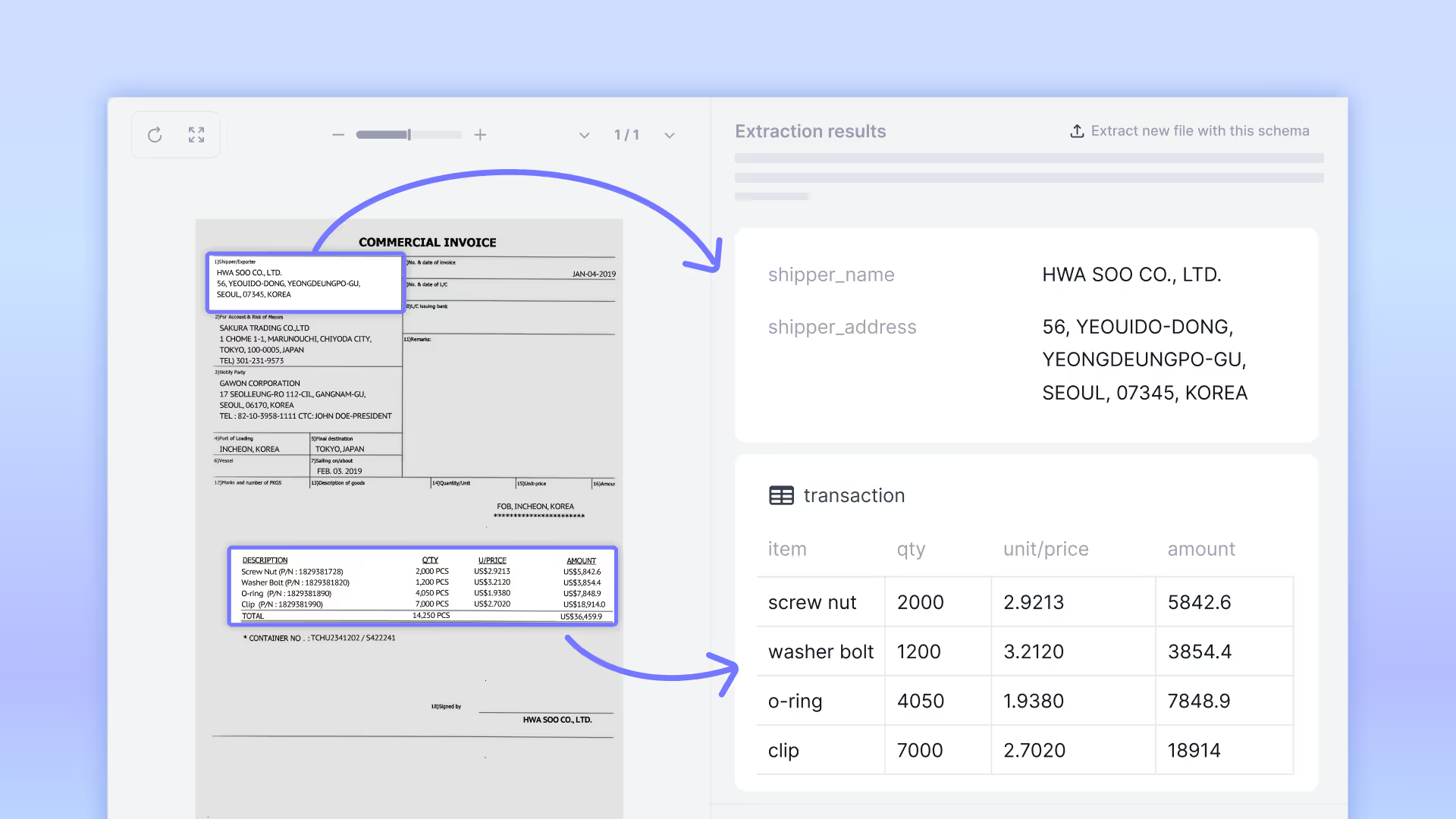

Extract Any Schema with Information Extract

Schema as configuration, not trained model. Add fields, update descriptions, deploy in minutes. When your insurance forms go from 47 to 63 fields, you update the schema. No retraining. No IT sprints.

Quick deployment:

- 3-minute auto schema generation. Upload 10 documents, AI suggests fields, review, deploy. 80% accuracy out of the box

- Few-shot examples for terminology. Add 2-3 examples for new terms. +6.65% accuracy improvement in internal tests

Built-in validation:

- Confidence scores: Low-confidence values flagged for review. Focus effort where it matters

- Source tracing: Check any value's location (page, coordinates) in the original document

- Auto-improvement : Errors feed back to update schema and propose improvements

Prerequisite: Any document format (PDF, scans, forms) is normalized through Document Parse, so format changes never break extraction.

Q: What if 50 new fields are added overnight?A: Update schema, validate sample batch, deploy. It just take few minutes.

Real Impact you can gain with Upstage Approach

Scenario 1 : New Insurance Product Launch

The Situation:

HealthCare Insurance is launching a new chronic care insurance plan on Friday. The operations team found out on Tuesday. The problem? Their document extraction system can't handle the new application form.

What changed in the application form:

The old application had 47 fields. The new chronic care plan requires 63 fields, which are 16 brand-new fields that the system has never seen before, such as:

Telehealth Provider Network= Which online doctors can the customer see?Care Coordinator Assignment= Which nurse will manage their care plan?Genetic Screening Consen= Does the customer agree to DNA testing?- ...and 13 more new fields

The pressure: 2,847 applications are already waiting to be processed. Without extracting these new fields, customers can't enroll. Marketing already emailed 50,000 people. Friday launch is non-negotiable.

How to handle this? Two approaches:

Scenario 2 : Regulatory Compliance Update

The Situation:

Tuesday morning, 9 AM: A new state privacy regulation takes effect. Immediately. No grace period. Your extraction system is now processing applications illegally.

What changed in the regulation:

Before today, insurance applications had one consent checkbox:

☑ Patient Consent = "I consent to use of my information"

Starting today, the law requires three separate consent checkboxes on every application:

☑ Treatment Consent = Can we share your info with doctors?

☑ Data Sharing Consent = Can we share with partner insurers?

☑ Research Consent = Can we use your data for medical studies?

The pressure: Your system extracts one "Patient Consent" value (yes/no). Now you need to extract three separate values from three different checkboxes. You have applications being processed right now. Legal says you must be compliant by end of day or stop processing entirely.

How to become compliant? Two approaches:

What Your Team Gains with Upstage

Build for Tomorrow’s Changes, Not Just Today’s Tasks

Your data structure will change. New products. New regulations. New acquisitions. The only certainty is change. The real question is : will your automation survive the shift or fall behind?

Upstage’s pipeline adapts to any schema,

- the one you have today,

- the one you'll need tomorrow,

- the next ten after your merger.

Ready to future-proof your document automation?

Contact us at contactus@upstage.ai or try demo and see how Information Extract, and AI Space adapt to your schema changes in hours, not months.

Your ontology changes. Your pipeline adapts. Your business keeps moving.