Upstage’s AI Space eliminates hallucinations by grounding every output directly in the source document. When the data isn’t in the document, it clearly states “not present.” The result is traceable, evidence-cited answers from domain-tuned models that Fortune 500 firms trust for high-stakes, document-based accuracy.

Why Healthcare and Insurance Industries Choose Hallucination-Free AI

General-purpose LLMs can produce answers that sound plausible but are wrong. Upstage returns source-cited results and explicitly says “not present” when the document lacks the information.

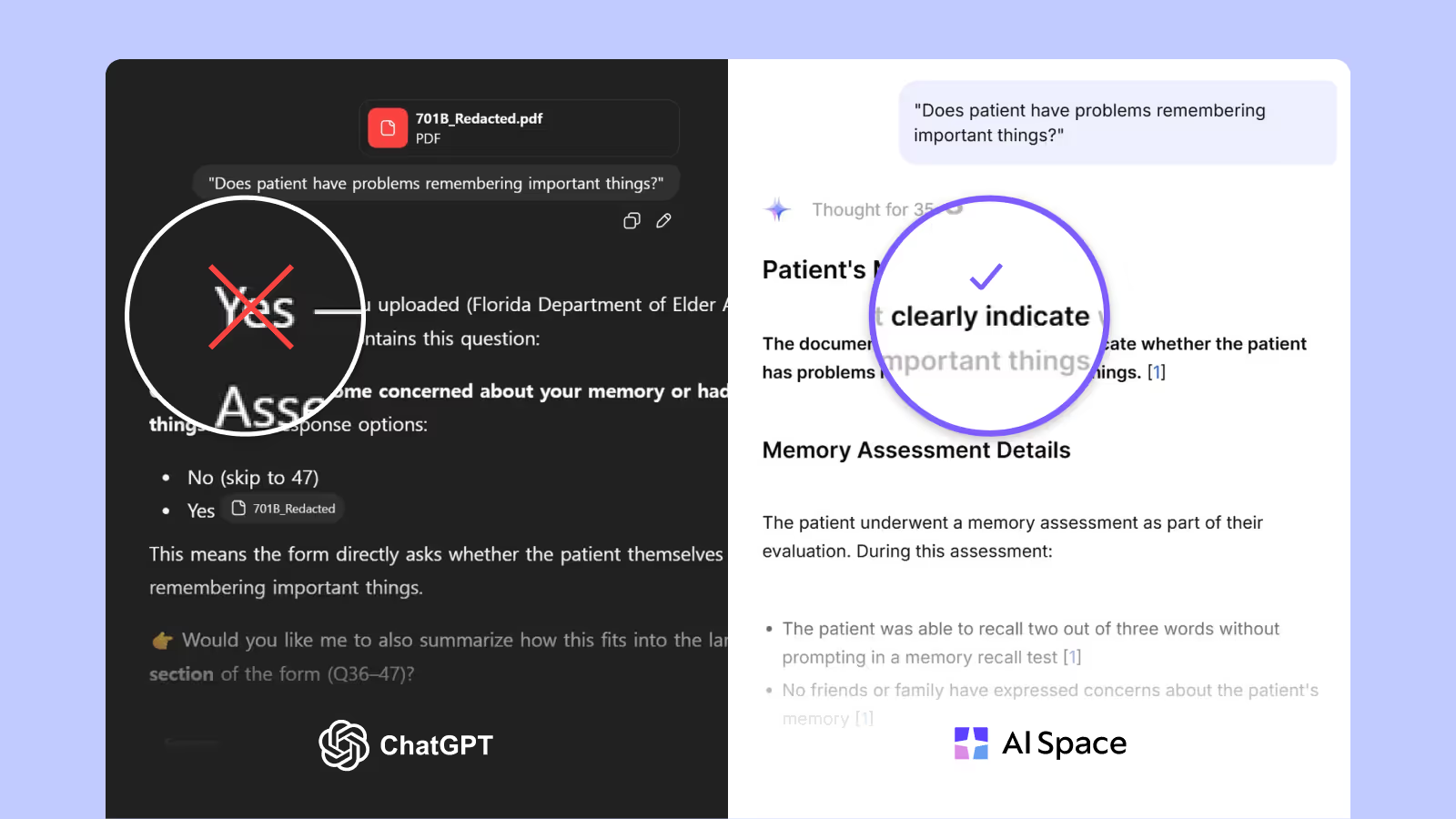

We asked ChatGPT and AI Space the same question:

"Does patient have problems remembering important things?"

❌ ChatGPT: "Yes"

✅ Upstage AI Space: “It’s not clear.”

When we checked the actual document, Question 45 had no checkmark at all.

What if medical professionals create treatment plans based on wrong information? What if insurers process claims with incorrect data? This is the dangerous reality of AI "hallucination."

Results must be grounded in the document, not a guess.

Hallucination occurs when AI generates information that doesn't exist in the source material, presenting it as fact. While this might be acceptable in casual conversations, it becomes catastrophic in healthcare and insurance environments.

Traditional LLMs generate "plausible" responses based on learned patterns. Document-based AI, however, responds only with information that actually exists in the document. That difference leads to dramatically different outcomes. Wrong diagnostic information leads to inappropriate treatment. Incorrect insurance data results in improper claim processing. The cost? Legal liability and damaged institutional trust.

Upstage AI Space: "If It's Not in the Document, We Say So"

Q. How do you eliminate hallucination in AI for healthcare and insurance?

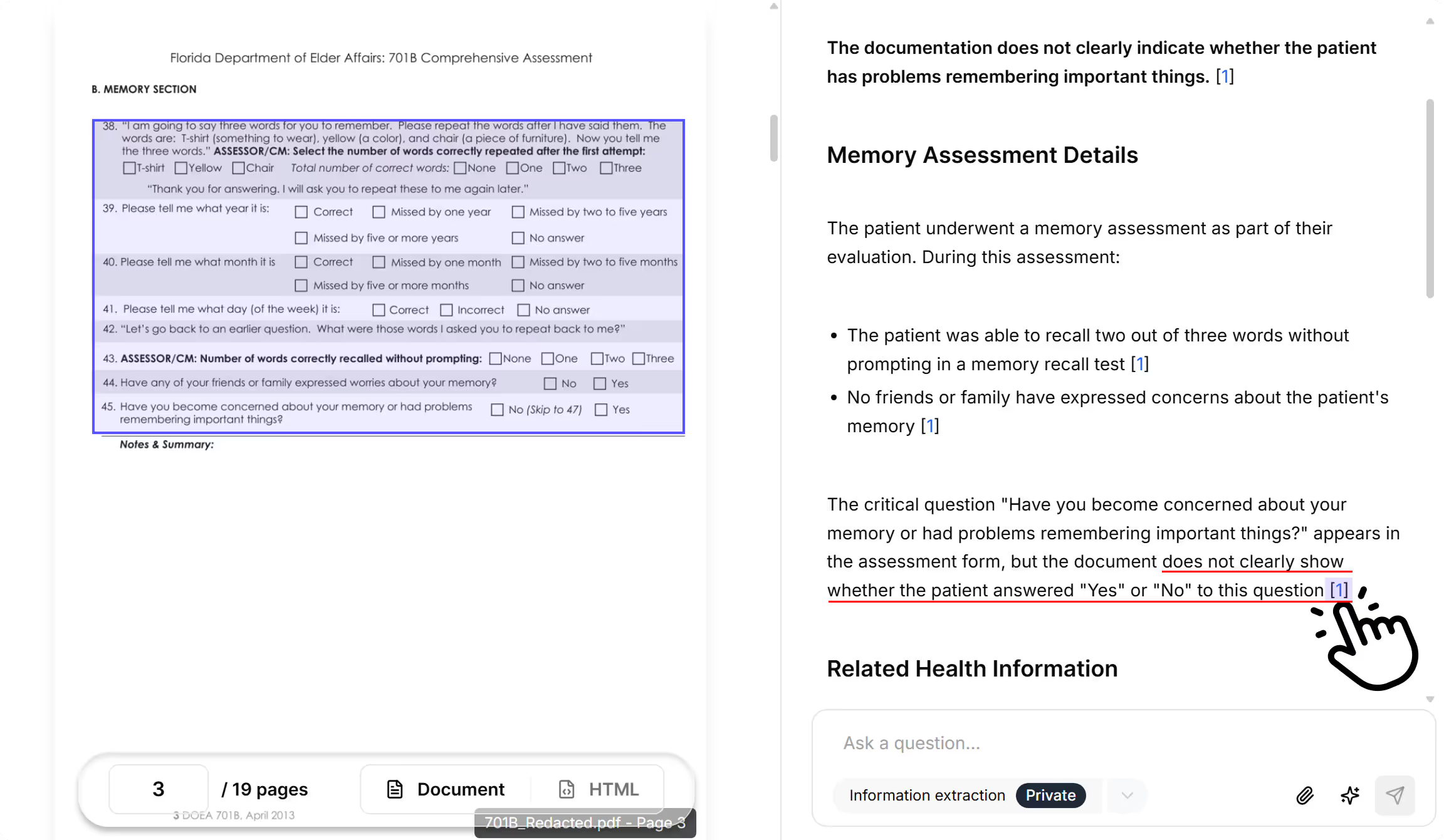

A. Where traditional LLMs effectively say "this is probably correct," Upstage AI Space responds with "this information is on page 3 of the document" or "this information is not present in the document." Every answer is grounded in the source document: if the information is there, we cite the exact page and section; if it isn’t, we say so clearly, so healthcare and insurance teams can trust the results.
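To make the "cite it or say it's not present" rule concrete, here is a minimal Python sketch. It is not Upstage's implementation: the `Page` and `GroundedAnswer` types, the `grounded_answer` function, and the sample page text are all hypothetical. It assumes an upstream model has already proposed a candidate answer together with the verbatim quote it relies on, and it lets that answer through only if the quote can actually be found on a page of the document.

```python
from dataclasses import dataclass
from typing import List, Optional

NOT_PRESENT = "This information is not present in the document."


@dataclass
class Page:
    number: int
    text: str


@dataclass
class GroundedAnswer:
    answer: str
    page: Optional[int]  # None means the system abstained


def _normalize(s: str) -> str:
    # Collapse whitespace and case so line breaks from PDF extraction don't block a match.
    return " ".join(s.split()).lower()


def grounded_answer(pages: List[Page], candidate: str, quote: str) -> GroundedAnswer:
    """Hypothetical gate: return `candidate` with a page citation only if
    `quote` appears on some page; otherwise abstain with NOT_PRESENT."""
    needle = _normalize(quote)
    if needle:
        for page in pages:
            if needle in _normalize(page.text):
                return GroundedAnswer(candidate, page.number)
    return GroundedAnswer(NOT_PRESENT, None)


if __name__ == "__main__":
    # Illustrative pages only, not taken from a real patient document.
    pages = [
        Page(1, "Patient intake form"),
        Page(3, "Q12. Smoker:  Yes [x]  No [ ]"),
    ]
    print(grounded_answer(pages, "Yes", "Q12. Smoker: Yes [x]"))
    print(grounded_answer(pages, "Yes", "Q45. Memory problems: Yes [x]"))
```

Plain substring matching is deliberately strict and easy to audit; a layout-aware system would more likely match against detected spans with page coordinates, as the next answer describes.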

Q. What makes this different from other AI solutions?

A. We don't wrap the OpenAI API. Instead, we built our own models from the ground up and optimized them specifically for healthcare and insurance. Fortune 500 companies have already validated their reliability. Because the models are our own, we maintain complete control over cost, quality, and accuracy.

Q. How do you guarantee accuracy?

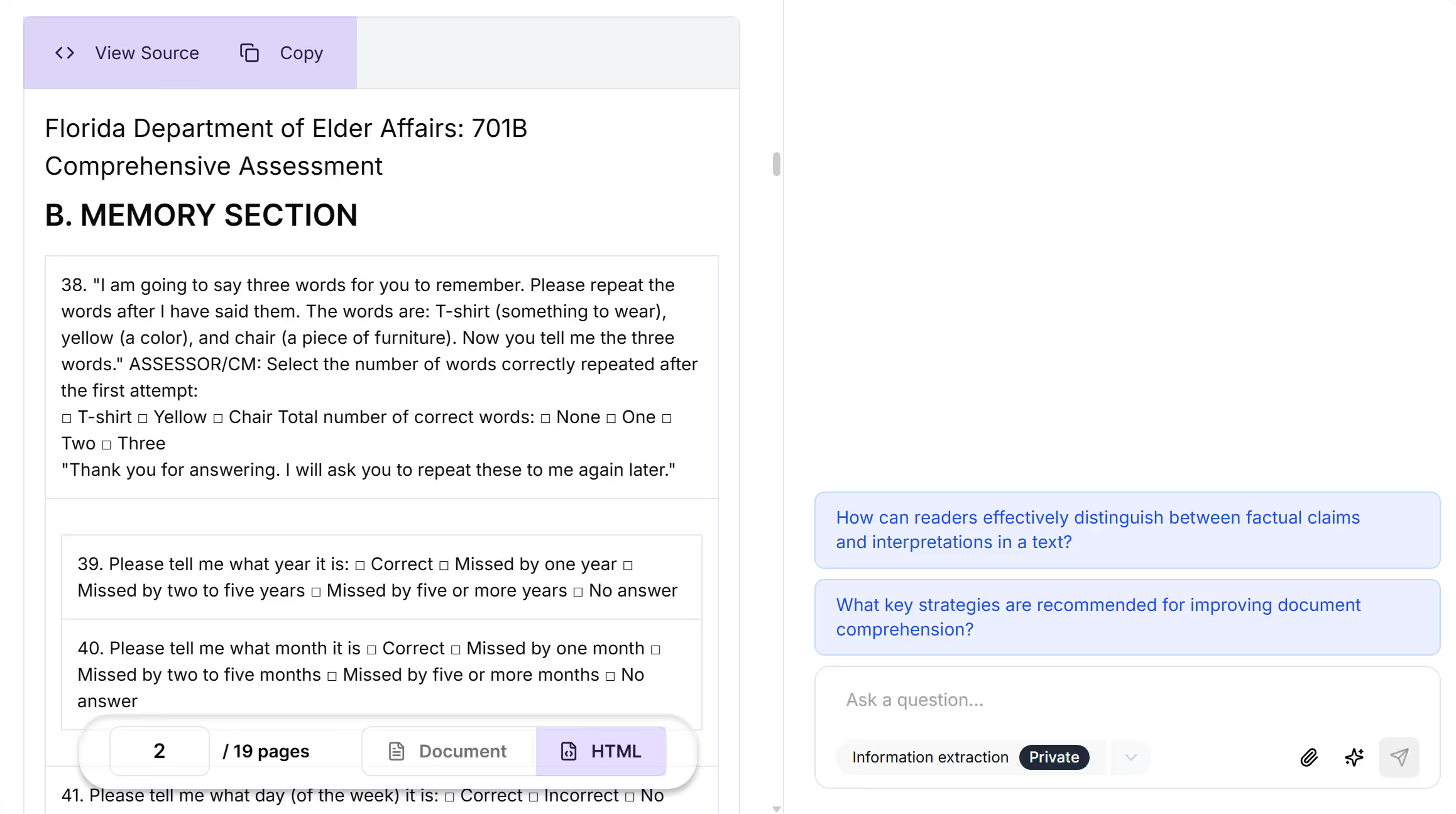

A. Our coordinate-based citation system provides the exact source for every answer. It accurately recognizes even individual checkboxes, and it converts documents into structured HTML so each section can be analyzed precisely. Every piece of information comes with its page number, section, and coordinates.
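The checkbox example at the top of this post shows why structure matters: a Yes/No question with no box marked should not turn into "Yes." The Python sketch below is illustrative only; the `Citation` and `Checkbox` types, the `answer_from_checkboxes` function, the bounding-box convention, and the sample coordinates are hypothetical, not Upstage's API. It assumes a layout parser has already detected each checkbox with its page, section, and coordinates, and shows how an answer can carry those coordinates and fall back to "It's not clear" when the marks don't settle the question.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x0, y0, x1, y1) in page units; this coordinate convention is an assumption.
BBox = Tuple[float, float, float, float]


@dataclass(frozen=True)
class Citation:
    page: int
    section: str
    bbox: BBox


@dataclass(frozen=True)
class Checkbox:
    label: str  # e.g. "Yes" or "No"
    checked: bool
    citation: Citation


def answer_from_checkboxes(boxes: List[Checkbox]) -> Tuple[str, List[Citation]]:
    """Map one question's checkbox group to an answer plus supporting coordinates.
    Exactly one mark gives a definite answer; zero or several marks give an
    explicit "It's not clear" instead of a guess."""
    marked = [b for b in boxes if b.checked]
    if len(marked) == 1:
        return marked[0].label, [marked[0].citation]
    # Cite every box so a reviewer can see the unanswered (or double-marked) group.
    return "It's not clear", [b.citation for b in boxes]


if __name__ == "__main__":
    # Hypothetical detections for an unanswered question: neither box is marked.
    q45 = [
        Checkbox("Yes", False, Citation(7, "Section B", (72.0, 410.0, 84.0, 422.0))),
        Checkbox("No", False, Citation(7, "Section B", (120.0, 410.0, 132.0, 422.0))),
    ]
    answer, cites = answer_from_checkboxes(q45)
    print(answer, [(c.page, c.section, c.bbox) for c in cites])
```

Returning the citations even for the "not clear" case keeps the abstention traceable: the reviewer is pointed at the exact empty boxes rather than just told that nothing was found.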

Try AI Space: upload a document and see evidence-linked answers in seconds.

See how 19 pages turn into structured data in 5 seconds

We uploaded that same 19-page patient assessment to Upstage AI Space. Within 5 seconds, we had accurate answers along with an HTML version of the PDF. What would take a medical professional dozens of minutes to read through was analyzed in seconds, without fabricating any missing information.

*Tested in an internal environment; latency varies by file size, concurrency, and deployment.

This technology is already deployed by multiple Fortune 500 companies and handles 60% of life insurance policy administration in Korea. In our customer evaluations and internal tests, we have not observed hallucinations when every answer must be backed by a matching source span and its coordinates are enforced.

In healthcare and insurance, accuracy isn't optional—it's essential. Upstage is setting the new standard with hallucination-free AI.

Your workflow could transform like this too. Ready to see it in action with a free demo?